Perceptually Motivated Video Sonification

What is sonification?

Sonification is the act of expressing in sound that which is inaudible. For instance, metal detectors use sound to display changes in magnetic fields.

Why sonification?

There are a number of advantages to using sonification. For example, certain types of patterns are easier to pick up by ear than by eye, and using sound to display information does not obscure the field of view. Sonification also opens up interesting creative possibilities. Art can be thought of as the search for new forms, and translating forms from one dimension to another can be a useful way to find them.

I have chosen to work with images and video because they can contain vast amounts of varied data. Using brushes or cameras, one can seek to capture the forms and beauty of the external world. By using largely the same tools in the same manner, but displaying these forms to the ear instead of the eye, I seek a new way to appreciate and express their beauty. Furthermore, since sound and music can only exist in time, it was natural to focus my efforts first on video images.

Perceptually Motivated Sonification

There have already been a number of attempts at sonifying images, from tools that assist sight-impaired people to artistic uses. However, in most cases there is very little apparent relationship between the resulting sounds and the images used. For this reason, the purpose of this research is to find ways of carrying out image sonification that create perceptual links between image and sound.

How?

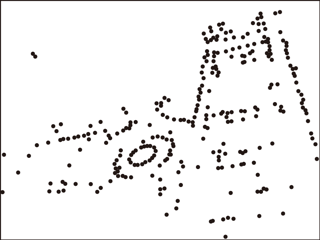

Complex images usually contain “feature points.” For example, a plain white wall lacks any feature that the eye can latch onto, but the corners of a door or a window in that wall can be easily and precisely identified. These feature points play a very important role not only in human perception but also in computer vision and image analysis. From a limited set of features, it is possible to describe the structure of a complex image. Furthermore, once image features have been identified, it is possible to track their position over time and thus know how the objects in the image are moving or changing.
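To make the wall and door example concrete, here is a minimal sketch of one classic way to find such points, the Moravec corner detector. It is an illustration I am assuming for this post, not necessarily the detector used in this project, and the names `moravec_score`, `feature_points`, `wall` and `corner` are hypothetical. The idea is that a patch around a feature point looks different when shifted in any direction, while a patch on a flat area or along an edge can be shifted in at least one direction without changing.

```python
def moravec_score(img, x, y, radius=1):
    """Smallest sum of squared differences between the window centred on
    (x, y) and the same window shifted by one pixel in 8 directions.
    Flat areas and straight edges score 0; corners score higher."""
    shifts = [(1, 0), (-1, 0), (0, 1), (0, -1),
              (1, 1), (1, -1), (-1, 1), (-1, -1)]
    best = None
    for dx, dy in shifts:
        ssd = 0
        for j in range(-radius, radius + 1):
            for i in range(-radius, radius + 1):
                a = img[y + j][x + i]
                b = img[y + j + dy][x + i + dx]
                ssd += (a - b) ** 2
        best = ssd if best is None else min(best, ssd)
    return best


def feature_points(img, threshold, radius=1):
    """Return (x, y) positions whose Moravec score reaches the threshold.
    A border of radius + 1 pixels is skipped so every window fits."""
    height, width = len(img), len(img[0])
    margin = radius + 1
    points = []
    for y in range(margin, height - margin):
        for x in range(margin, width - margin):
            if moravec_score(img, x, y, radius) >= threshold:
                points.append((x, y))
    return points


# Hypothetical test images: a plain "wall" and a wall with one bright
# rectangular region whose corner sits at (6, 6).
wall = [[0] * 12 for _ in range(12)]
corner = [[1 if (x >= 6 and y >= 6) else 0 for x in range(12)]
          for y in range(12)]

print(feature_points(wall, threshold=2))    # no features on a plain wall
print(feature_points(corner, threshold=2))  # only the corner is reported
```

Pixels along the straight edges of the bright region are not reported, because sliding the window along the edge leaves it unchanged. Only the corner stands out in every direction.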

Just as a complex image can be described using a set of very simple features, complex sounds can be synthesized by adding together several simple sounds. If we assign control of each of those simple sonic components to a single moving image feature, we can achieve “motion flow field sonification.”

If we look at an image showing only the positions of the feature points, we nevertheless see lines, circles and various other shapes. Since there are only points in the image, we must conclude that those shapes are in fact the result of our brain’s interpretation of the image. Gestalt psychology offers some explanations as to how simple objects group together to form perceptual objects, and the Gestalt rules that describe these groupings can also be applied to the perception of sound. Hence, it should be possible to achieve a level of resemblance between an image and its sonification by taking care to conserve the perceptual relationships between the visual features when translating them to sound.

In practice, the “simple sounds” can be almost anything. Since there is no single correct way to sonify an image artistically, the choice of the precise type of sound to use is left to the creator.
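As a rough sketch of the idea of one simple sound per tracked feature, the example below gives each feature its own sine oscillator and sums them. Everything specific here is my assumption for the sake of illustration rather than the mapping used in this project: sine tones as the “simple sounds,” vertical position mapped to pitch, speed mapped to loudness, and the names `pitch_for_height`, `sonify_tracks` and `max_speed`.

```python
import math


def pitch_for_height(y, height, low_hz=220.0, high_hz=880.0):
    """Map a vertical pixel position to a frequency on a logarithmic
    scale, so that the top row (y = 0) gives high_hz."""
    t = 1.0 - y / (height - 1)
    return low_hz * (high_hz / low_hz) ** t


def sonify_tracks(tracks, height, frame_rate=25, sample_rate=8000,
                  max_speed=10.0):
    """Render one sine oscillator per tracked feature and mix them.

    tracks: list of trajectories, each a list of (x, y) per video frame.
    Returns mono samples in [-1, 1] as a list of floats.
    """
    n_frames = min(len(track) for track in tracks)
    samples_per_frame = sample_rate // frame_rate
    out = [0.0] * (n_frames * samples_per_frame)
    for track in tracks:
        phase = 0.0
        prev_x, prev_y = track[0]
        for f in range(n_frames):
            x, y = track[f]
            freq = pitch_for_height(y, height)
            # Loudness follows speed, so a motionless feature is silent.
            speed = math.hypot(x - prev_x, y - prev_y)
            amp = min(1.0, speed / max_speed)
            step = 2.0 * math.pi * freq / sample_rate
            # Amplitude changes in steps at frame boundaries; a real
            # renderer would smooth it to avoid clicks.
            for s in range(samples_per_frame):
                out[f * samples_per_frame + s] += amp * math.sin(phase)
                phase += step
            prev_x, prev_y = x, y
    # Divide by the number of oscillators to keep the mix within [-1, 1].
    return [v / len(tracks) for v in out]


# Hypothetical trajectories: one feature at rest, one moving sideways.
still = [(10, 50)] * 5
moving = [(10 + 5 * i, 50) for i in range(5)]
audio = sonify_tracks([still, moving], height=101)
```

With this kind of mapping, features that move together produce pitch and loudness contours that change together, which is one way of trying to carry a visual grouping over into sound. Any other sound source could be substituted for the sine tones.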

1 Comment

Hi Jean-Marc,

I’m reading your blog while downloading the latest release of cv.jit.

Here is a similar example I made (with some video shot in Japan as well), without cv.jit, but basic video -> fft mapping.

http://www.gregbeller.com/2013/05/sonification/

Looking forward to using cv.jit (once again) in a new project.

Thanks for that!
